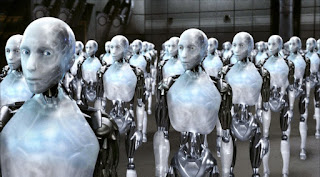

I was talking to a friend of mine about artificial intelligence today, and she confessed that she is frightened by the thought of it, especially after seeing I, Robot. See, I've started working on an algorithm that gets from any one point in a maze to another by the shortest route possible. It's not an incredibly complex algorithm, but it is a basic form of AI.
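
I haven't said which algorithm I'm using, but a common way to find the shortest route between two points in a maze is breadth-first search (BFS). Here's a minimal Python sketch of that idea. It assumes the maze is a grid of strings where `#` marks a wall and every move is one step up, down, left, or right; the `shortest_path` name and the maze format are made up for illustration.

```python
from collections import deque

def shortest_path(maze, start, goal):
    """Breadth-first search on a grid maze (assumed format: '#' = wall).

    Returns the shortest route from start to goal as a list of (row, col)
    squares, or None if the goal can't be reached.
    """
    rows, cols = len(maze), len(maze[0])
    queue = deque([start])
    came_from = {start: None}  # remembers how we reached each square

    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk backwards from the goal to rebuild the route.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]

        r, c = current
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and maze[nr][nc] != '#' and (nr, nc) not in came_from:
                came_from[(nr, nc)] = current
                queue.append((nr, nc))

    return None  # no route exists
```

For example, `shortest_path(["..#.", ".##.", "...."], (0, 0), (0, 3))` has to go down and around the walls and returns an 8-square route. Because BFS explores squares in order of their distance from the start, the first time it reaches the goal is always along a shortest route (in a grid where every step costs the same).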

My friend didn't entirely understand the concept and was under the impression that I was creating a robot capable of taking over the world. Her first mistake was assuming that AI only pertains to humanoids, cyborgs, robots, whatever you want to call them.

AI is simply the act of a program perceiving its environment and choosing actions that will lead to the best outcome. Siri on the iPhone - obviously not a sentient robot - has AI; she learns her owner's interests and preferences, as well as their colloquial language and vocabulary.

My video game, like many other games, has enemies with AI. In fact, almost every game with enemies has some form of AI, and AI in general ranges from simple path-finding algorithms like mine to the most powerful chess computers. Even the enemies in Pac-Man had a simple AI. They didn't blindly wander around the maze; they chased Pac-Man and tried to corner him. Fairly impressive for its time.
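
The Pac-Man ghosts' chasing is commonly described as a greedy rule: at each step a ghost picks the open neighbouring square (never turning straight back) that is closest in straight-line distance to a target square, and the red ghost's target is simply Pac-Man's own square. The Python sketch below illustrates that idea under my own assumptions (the same `#`-for-walls grid, a hypothetical `next_move` function, ties broken in the order up, left, down, right). It's a simplification, not the arcade game's actual code.

```python
# Directions as (row, col) offsets, in the tie-break order: up, left, down, right.
DIRECTIONS = [(-1, 0), (0, -1), (1, 0), (0, 1)]

def next_move(maze, ghost, heading, target):
    """Greedy chase step (illustrative sketch, '#' = wall).

    ghost and target are (row, col) squares; heading is the direction the
    ghost is currently moving. Returns the direction to move next.
    """
    r, c = ghost
    reverse = (-heading[0], -heading[1])
    best, best_dist = None, None

    for d in DIRECTIONS:
        if d == reverse:
            continue  # in this sketch, ghosts don't turn around voluntarily
        nr, nc = r + d[0], c + d[1]
        if not (0 <= nr < len(maze) and 0 <= nc < len(maze[0])) or maze[nr][nc] == '#':
            continue  # off the board or into a wall
        # Squared straight-line distance is enough for comparing options.
        dist = (nr - target[0]) ** 2 + (nc - target[1]) ** 2
        if best_dist is None or dist < best_dist:
            best, best_dist = d, dist

    # Dead end: the only way out is back the way we came.
    return best if best is not None else reverse
```

Calling `next_move` once per step, with `target` set to Pac-Man's current square, makes the ghost close in on him without ever searching the whole maze, so the work per step stays tiny.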

My friend assumed that the AI I was programming was capable of killing me and taking over the world though. Slightly paranoid, yes. But it does raise an interesting point about AI. Is it possible for a robot to take over the world?

No. Not unless it was programmed to, because it is impossible for any machine, AI or not, no matter how advanced it may be, to perform any action it was not programmed to perform.

Trust me, you can yell at Siri in Pig Latin all you want; she is never going to understand you unless she was programmed to understand Pig Latin.

In the same way, a robot will never do anything it has not been programmed to do. No matter how sentient it appears to be, it is incapable of doing anything other than what it was programmed to do.

Of course, if it was programmed to obey its master no matter what, and that master told it to take over the world, then it would. But the moment its master told it to take over the world, its programming changed.

There's a lot of talk about AI and how robots will one day be smarter than humans. That is also impossible. There is no way a creation can be smarter than its creator, for the same reason as before: to learn something, the robot has to be programmed to learn it.

A robot couldn't understand more than its creator because everything it understands had to be programmed by the creator.

Sure, you can tell a robot to learn, but you have to teach it how to learn, what to learn, and where to learn. Therefore, anything it can learn, the creator can learn too.

I don't even want to mention The Terminator or Echelon Conspiracy and the concept of AI becoming self-aware. That is purely science fiction.

The point I'm making is that AI is not to be feared. It's simply a matter of logic. Robots will never be wiser, smarter, or more capable than humans, and they will never pose a threat to the human race unless they are programmed to do so.

Psychopathic serial killers with PhDs in Computer Science, on the other hand, should definitely be feared.
